AWS CLI Download S3 File

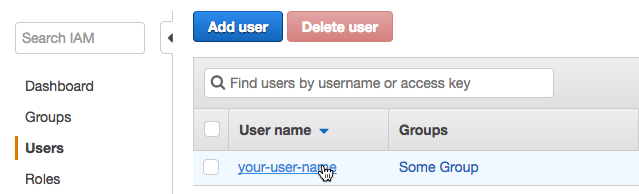

Apr 19, 2013 - It turns out that the behaviour was due to a bug, which has since been fixed.

Configure S3 CLI credentials - The AWS CLI stores the credentials it will use in the file ~/.aws/credentials. If this is already set up for the copy_user [...]
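As a rough sketch of what that credentials file looks like, the snippet below writes a throwaway file with placeholder keys into a temporary directory and points the CLI at it through the AWS_SHARED_CREDENTIALS_FILE environment variable. The key values are placeholders, and the temporary location is an assumption made here so that a real ~/.aws/credentials is never overwritten.

```shell
#!/bin/sh
# Sketch only: write a sample credentials file somewhere harmless.
# The key values below are placeholders, not working credentials.
cred_dir=$(mktemp -d)
cred_file="$cred_dir/credentials"

cat > "$cred_file" <<'EOF'
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
EOF

# Credentials are secrets, so restrict the file to the current user.
chmod 600 "$cred_file"

# Ask the AWS CLI to read this file instead of ~/.aws/credentials.
export AWS_SHARED_CREDENTIALS_FILE="$cred_file"
echo "credentials file: $AWS_SHARED_CREDENTIALS_FILE"
```

In normal use the file lives at ~/.aws/credentials and is usually created by running aws configure rather than by hand.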


Comments

commented Sep 30, 2016

I'm getting an error on Windows trying to [...]

CLI: [...]

Bucket Structure: [...]

Command: [...]

The same exact error occurs when I use [...] Any idea what I am doing wrong? There are no other files in my bucket or sub-folder.

changed the title to "aws cp [Errno 2] No such file or directory" Sep 30, 2016

referenced this issue Oct 1, 2016

Closed: changed behavior: aws s3 cp --recursive yields error status code even when all files were copied #2214

commented Oct 1, 2016

This issue remains a problem in: 1.11.0

commented Oct 4, 2016

@mariotacke So this is the part that looks a little fishy: [...] Could you run a list objects on that key? I have a feeling the key is of non-zero size. If it is of non-zero size, the reason you are seeing this is because when a file ends with [...] If this is not the case, could you provide debug logs for the command you are running, as I was not able to reproduce it?

added the closing-soon-if-no-response label Oct 4, 2016

commented Oct 4, 2016

Interesting. Running with [...] Right now I am using S3 Browser to upload files to my bucket. I created an empty folder on Windows, put the [...]

commented Oct 4, 2016 • edited

I ran: [...] Now it works fine. I wonder what I can do on Windows to prevent creating this unnecessary file during a folder upload? I just tested this on OSX with Cyberduck and it does not create the problematic file. I guess it's the software I'm using on Windows. Creating the folder and file through the CLI and uploading it through the CLI does not create the problematic file.

commented Oct 5, 2016

So are you using the AWS Console and Windows to upload the directory with the file in it, and can you reproduce this every time? Usually when you click the create directory button in the AWS Console, the console creates an empty object ending with [...]

commented Oct 6, 2016

Sorry, that was a misunderstanding: when I use the CLI everything works fine (upload, sync/cp). Previously I used this software S3 Browser which seems to create a non-zero file for any uploaded directory from Windows. On OSX, I use Cyberduck which does not cause a problem. My issue is solved by not using the S3 Browser software. With your help I was able to identify and remedy the issue. You can close the issue. Thanks!

commented Oct 6, 2016

Sounds good. Glad you were able to figure things out. Closing.
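For anyone hitting the same error, the check suggested in the thread (list the objects and look at their sizes) can be sketched roughly as below. This is only an illustration: it assumes the problematic object is a folder-marker key ending in a slash, which the surviving thread text does not actually show, and the function name and sample listing are made up for the example.

```shell
#!/bin/sh
# Hypothetical helper: reads "key<TAB>size" lines on stdin and prints the
# keys that end in "/" (assumed folder markers) but have a non-zero size.
nonempty_folder_markers() {
    awk -F '\t' '$1 ~ /\/$/ && $2 + 0 > 0 { print $1 }'
}

# Made-up sample listing so the sketch runs without AWS access.
printf 'photos/\t0\nbackup/\t11\nbackup/file.txt\t2048\n' | nonempty_folder_markers

# Against a real bucket (example-bucket and the prefix are placeholders):
#   aws s3api list-objects-v2 --bucket example-bucket --prefix backup/ \
#       --query 'Contents[].[Key,Size]' --output text | nonempty_folder_markers
```

With the sample listing, only backup/ is printed, because photos/ has size zero and backup/file.txt does not end in a slash.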

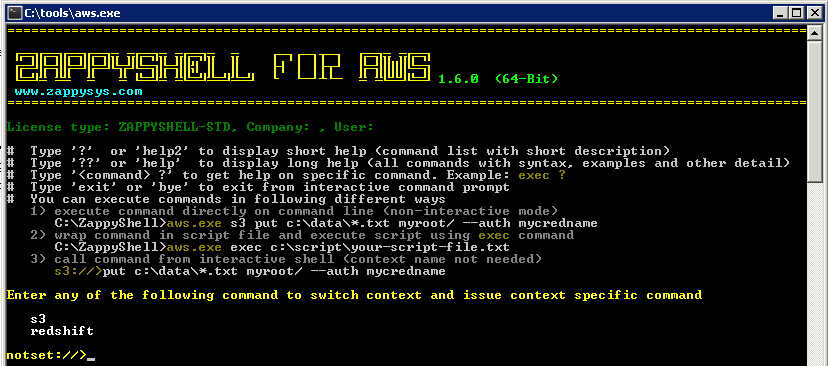

AWS CLI has made working with S3 very easy. Once you get AWS CLI installed you might ask “How do I start copying local files to S3?”

The syntax for copying files to/from S3 in AWS CLI is:

aws s3 cp <source> <destination>

The “source” and “destination” arguments can either be local paths or S3 locations. The three possible variations of this are:

aws s3 cp <Local Path> <S3 URI>

aws s3 cp <S3 URI> <Local Path>

aws s3 cp <S3 URI> <S3 URI>
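To make the three variations concrete, here is a small sketch that only inspects a source/destination pair and reports which variation it is, without contacting AWS. The helper names, bucket names, and file paths are hypothetical and exist only for illustration.

```shell
#!/bin/sh
# Hypothetical helpers: classify a source/destination pair for aws s3 cp.
is_s3_uri() {
    case "$1" in
        s3://*) return 0 ;;
        *)      return 1 ;;
    esac
}

cp_variation() {
    src=$1
    dst=$2
    if is_s3_uri "$src" && is_s3_uri "$dst"; then
        echo "s3-to-s3"
    elif is_s3_uri "$src"; then
        echo "download"
    elif is_s3_uri "$dst"; then
        echo "upload"
    else
        # aws s3 cp needs at least one side to be an S3 URI.
        echo "invalid"
        return 1
    fi
}

cp_variation ./report.pdf s3://example-bucket/reports/report.pdf
cp_variation s3://example-bucket/reports/report.pdf ./report.pdf
cp_variation s3://example-bucket/a.txt s3://other-bucket/a.txt
```

The three calls print upload, download, and s3-to-s3 in that order, matching the three forms listed above.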

To copy all the files in a directory (local or S3), you must use the --recursive option. For more complex Linux-style “globbing” functionality, you must use the --include and --exclude options. See this post for more details.
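A minimal sketch of how --recursive, --exclude, and --include combine is shown below. The run wrapper and the S3_EXECUTE variable are hypothetical conveniences added here so that the commands are only printed by default; the bucket name and local paths are placeholders.

```shell
#!/bin/sh
# Hypothetical wrapper: print the command by default; set S3_EXECUTE=1 to run it.
run() {
    if [ "${S3_EXECUTE:-0}" = "1" ]; then
        "$@"
    else
        printf 'would run:'
        printf ' %s' "$@"
        printf '\n'
    fi
}

# Copy every file under ./logs to the bucket prefix.
run aws s3 cp ./logs s3://example-bucket/logs/ --recursive

# Copy only *.txt files: exclude everything, then re-include the pattern.
# Filters that appear later on the command line take precedence.
run aws s3 cp ./logs s3://example-bucket/logs/ --recursive --exclude '*' --include '*.txt'

# --dryrun asks the CLI itself to list the operations without performing them.
run aws s3 cp ./logs s3://example-bucket/logs/ --recursive --exclude '*.tmp' --dryrun
```

The printed form drops the quotes around the patterns, so when typing these commands by hand keep the quotes so the shell does not expand * before the CLI sees it.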

Here are a couple of simple examples of copying local files to S3: